Car companies have developed a design for a universal data format that will allow standardised vehicle data exchange, bringing the crowdsourcing paradigm to self-driving automobiles. Sharing data such as real-time traffic, weather and parking spaces will become much faster and easier. The design is called the Sensor Ingestion Interface Specification (SENSORIS), and it has been taken up by ERTICO – ITS Europe, a Brussels-based public-private partnership that fosters the development of globally adopted standards for future automotive and transportation technologies.

The aim is to define a standardised interface for exchanging information between in-vehicle sensors and a dedicated cloud, as well as between clouds. Such an interface would enable broad access to, and delivery and processing of, vehicle sensor data; make it easy for all players to exchange that data; and support the enriched location-based services that are key to mobility services and to automated driving.

Currently, 11 major automotive and supplier companies have signed up for the SENSORIS innovation platform: AISIN AW, Robert Bosch, Continental, Daimler, Elektrobit, HARMAN, HERE, LG Electronics, NavInfo, PIONEER and TomTom. Pooling comparable vehicle data from millions of vehicles around the world is expected to help make fully automated driving possible. Each vehicle would have access to near-real-time information on road conditions, traffic and hazards, helping self-driving vehicles make better decisions on the road.
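To make the crowdsourcing idea concrete, here is a minimal sketch of how pooled reports might be turned into confirmed hazards. It assumes a hypothetical `HazardReport` record and a simple confirmation rule that does not come from SENSORIS: a hazard on a road segment counts as confirmed once a minimum number of distinct vehicles have reported it within a recent time window.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class HazardReport:
    """One vehicle's observation of a hazard (hypothetical fields, not a real schema)."""
    vehicle_id: str
    segment_id: str    # hypothetical road-segment identifier
    hazard: str        # e.g. "ice", "pothole", "stalled_vehicle"
    timestamp_s: float


def confirmed_hazards(reports, now_s, window_s=300.0, min_vehicles=3):
    """Return sorted (segment_id, hazard) pairs reported by at least
    `min_vehicles` distinct vehicles within the last `window_s` seconds."""
    seen = defaultdict(set)
    for r in reports:
        # Ignore stale reports so cleared hazards stop being confirmed.
        if now_s - r.timestamp_s <= window_s:
            seen[(r.segment_id, r.hazard)].add(r.vehicle_id)
    return sorted(key for key, vehicles in seen.items() if len(vehicles) >= min_vehicles)
```

Counting distinct vehicles rather than raw reports means one faulty sensor repeating itself cannot confirm a hazard on its own; both thresholds are illustrative values.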

Many of today's futuristic projects, from self-driving cars and advanced robots to Internet of Things (IoT) applications for smart cities, smart homes and smart health, rely on data from sensors of various kinds. To support machine learning and other complex technical goals, enormous quantities of data must be gathered, synthesised, analysed and turned into action in real time.

A self-driving car uses complex image recognition and a radar/lidar system to detect objects on the road. These objects may be fixed (curbs, traffic signals, lane markings) or moving (other vehicles, bicycles, pedestrians). Validating this perception data can involve more than 1,000 hours of car camera and radar/lidar recordings. At three frames per second, that amounts to almost 11 million images to analyse and correct, which is too many to handle with off-the-shelf tools.
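The 11-million figure follows from the recording length and frame rate: 1,000 h × 3,600 s/h × 3 frames/s = 10.8 million frames, a lower bound since the recordings exceed 1,000 hours. The sketch below reproduces that arithmetic; the per-frame review time is a hypothetical value added only to show the scale of manual checking.

```python
def frames_to_review(hours: float, fps: float) -> int:
    """Number of still frames in `hours` of recording sampled at `fps` frames per second."""
    seconds = hours * 3600
    return round(seconds * fps)


total = frames_to_review(hours=1000, fps=3)
print(f"{total:,} frames")  # 10,800,000 frames

REVIEW_SECONDS_PER_FRAME = 2  # hypothetical manual-review cost, not from the source
review_hours = total * REVIEW_SECONDS_PER_FRAME / 3600
print(f"{review_hours:,.0f} person-hours")  # 6,000 person-hours
```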

The architecture of a purpose-built tool lets engineers validate the car system against large amounts of information from real, direct observations, known as ground-truth data. Such a tool provides a way to check the accuracy of sensor-derived data and to make sure that the car responds appropriately to actual objects and events, not to sensor artefacts.
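The article does not say how the tool compares sensor output with ground truth. A common technique for camera detections is intersection-over-union (IoU) matching of bounding boxes, which is swapped in here as an assumption. `Box`, `iou` and `validate_frame` are hypothetical names: detections that match no labelled object are candidate sensor artefacts, and labelled objects left unmatched are misses.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Box:
    """Axis-aligned box in image pixels: (x1, y1) top-left, (x2, y2) bottom-right."""
    x1: float
    y1: float
    x2: float
    y2: float
    label: str

    def area(self) -> float:
        return max(0.0, self.x2 - self.x1) * max(0.0, self.y2 - self.y1)


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two boxes (0.0 when they do not overlap)."""
    ix = max(0.0, min(a.x2, b.x2) - max(a.x1, b.x1))
    iy = max(0.0, min(a.y2, b.y2) - max(a.y1, b.y1))
    inter = ix * iy
    union = a.area() + b.area() - inter
    return inter / union if union > 0 else 0.0


def validate_frame(detections, ground_truth, threshold=0.5):
    """Greedily match detections to same-label ground-truth boxes.

    Returns (true_positives, false_positives, false_negatives).
    """
    unmatched_gt = list(ground_truth)
    tp = fp = 0
    for det in detections:
        # Pick the best remaining ground-truth box at or above the IoU threshold.
        best, best_iou = None, threshold
        for gt in unmatched_gt:
            score = iou(det, gt)
            if gt.label == det.label and score >= best_iou:
                best, best_iou = gt, score
        if best is None:
            fp += 1  # no real object explains this detection
        else:
            tp += 1
            unmatched_gt.remove(best)  # each ground-truth box matches at most once
    return tp, fp, len(unmatched_gt)
```

Greedy matching is simple but not globally optimal; an assignment solver such as the Hungarian algorithm is a common alternative when many boxes overlap.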

The value chain for future self-driving vehicles will be driven by software feature sets, low system costs and high-performance hardware. Future autonomous-vehicle concepts will be tested with simulation software based on agent-based modelling, which recreates real-world driving scenarios so that complex driving situations can be tested and validated virtually. This method places agents (vehicles, people, infrastructure) with specific driving characteristics (such as selfish, aggressive or defensive) and their connections in a defined environment (cities, test tracks, military installations) to understand the complex interactions that occur during simulation testing.
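A toy agent-based scenario can illustrate the idea. In this sketch, all parameters are hypothetical and chosen for illustration rather than calibrated: a scripted lead vehicle brakes hard on a single lane, and each following agent starts braking only after the vehicle directly ahead of it has been braking for that agent's reaction time. The profiles named in the article differ here only in time headway and reaction time.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical parameters for the driving styles named in the article.
PROFILES = {
    "defensive":  {"headway_s": 2.5, "reaction_s": 1.2},
    "selfish":    {"headway_s": 1.2, "reaction_s": 1.0},
    "aggressive": {"headway_s": 0.6, "reaction_s": 0.8},
}
DT = 0.1           # simulation step in seconds
DECEL = 6.0        # braking deceleration in m/s^2, assumed equal for every agent
MIN_SPACING = 2.0  # metres of spacing kept at zero speed (assumed)


@dataclass
class Agent:
    profile: Optional[str]  # None for the scripted lead vehicle
    position: float         # metres along a single lane
    speed: float            # m/s
    brake_step: Optional[int] = None


def simulate(profiles, speed=25.0, lead_brake_step=10, steps=200):
    """Return [(profile, minimum gap in m)] for each follower; a negative gap means a collision."""
    agents = [Agent(None, 1000.0, speed, lead_brake_step)]
    for name in profiles:
        gap = MIN_SPACING + PROFILES[name]["headway_s"] * speed
        agents.append(Agent(name, agents[-1].position - gap, speed))
    min_gaps = [a.position - b.position for a, b in zip(agents, agents[1:])]

    for step in range(steps):
        # Each follower reacts only to the vehicle directly ahead of it.
        for ahead, agent in zip(agents, agents[1:]):
            reaction_steps = round(PROFILES[agent.profile]["reaction_s"] / DT)
            if (agent.brake_step is None and ahead.brake_step is not None
                    and step - ahead.brake_step >= reaction_steps):
                agent.brake_step = step
        # Advance every agent one step (speed first, then position).
        for agent in agents:
            if agent.brake_step is not None and step >= agent.brake_step:
                agent.speed = max(0.0, agent.speed - DECEL * DT)
            agent.position += agent.speed * DT
        for i, (ahead, agent) in enumerate(zip(agents, agents[1:])):
            min_gaps[i] = min(min_gaps[i], ahead.position - agent.position)

    return list(zip(profiles, min_gaps))
```

With the default scenario, `simulate(["defensive", "selfish", "aggressive"])` leaves the defensive and selfish agents with minimum gaps of about 34.5 m and 7 m, while the aggressive agent's gap drops to about -3 m, i.e. a collision. Each gap shrinks by roughly the distance covered during the reaction time, which is the kind of interaction effect such simulations are meant to expose before any physical test vehicle is put at risk.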

The benefits this approach aims to deliver to car manufacturers and their suppliers are faster product development cycles, lower costs from test-vehicle damage and a lower risk of harming vehicle occupants under test conditions.

The goal has always been to find a home for this specification that is open, global and accessible to all. This is a vital step on the path to creating a shared information network for safer roads. If a car around the next corner hits the brakes because there is an obstruction, that information could be used to signal to the drivers behind to slow down ahead of time, resulting in smoother, more efficient journeys and lower risk of accidents. But that can only work if all cars can speak and understand the same language.

SENSORIS

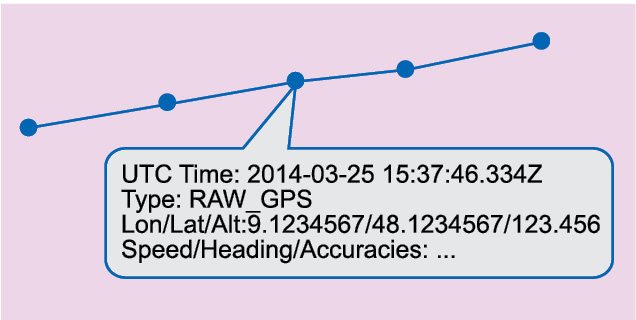

Vehicles driving on the road are equipped with a magnitude of sensors. This sensor data may be transferred over any kind of technology from the vehicle to an analytic processing backend. Between individual vehicles and the analytic processing backend, an OEM or system vendor backend may be located as a proxy.

The sensor data interface specification defines the content of sensor data messages and their encoding format(s) as these are submitted to the analytic processing backend. However, the specification may be used between other components as well. Sensor data is submitted as messages with various types of content. It is common to all kinds of submitted messages as these are related to one or multiple locations.

Fig. 1: Paths
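As an illustration of this message model, the following sketch defines a message whose content type varies but which always carries at least one location, and shows it passing through an OEM proxy unchanged. The field names, the JSON encoding and the `oem_proxy` function are hypothetical; the real specification defines its own schema and encoding format(s).

```python
import json
from dataclasses import asdict, dataclass, field
from typing import List


@dataclass
class Location:
    latitude_deg: float
    longitude_deg: float


@dataclass
class SensorMessage:
    """Hypothetical message: content varies by type, but at least one location is required."""
    content_type: str  # e.g. "traffic_sign", "road_weather" (illustrative values)
    locations: List[Location]
    payload: dict = field(default_factory=dict)

    def __post_init__(self):
        if not self.locations:
            raise ValueError("a sensor data message must relate to at least one location")


def encode(msg: SensorMessage) -> bytes:
    """Illustrative JSON encoding; asdict() also converts the nested Location objects."""
    return json.dumps(asdict(msg), sort_keys=True).encode("utf-8")


def decode(data: bytes) -> SensorMessage:
    raw = json.loads(data.decode("utf-8"))
    raw["locations"] = [Location(**loc) for loc in raw["locations"]]
    return SensorMessage(**raw)


def oem_proxy(data: bytes) -> bytes:
    """Hypothetical OEM/system-vendor backend between vehicle and analytic backend.
    Since both hops use the same message format, it can forward the bytes as-is."""
    return data
```

Because the format is the same on every hop, the analytic backend can decode a message identically whether it arrived directly from a vehicle or through a proxy.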

Sensor data messages may be time-critical and submitted in near-real time, or they may be of informational value and submitted with an acceptable delay, accumulated together with other data. Neither priority nor latency requirements are part of the specification: the content and format of sensor data messages are independent of whether they are submitted in near-real time or with a delay.
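The separation between message content and delivery timing can be sketched as a hypothetical vehicle uplink that sends time-critical messages immediately and batches the rest. Priority is a transport decision made by the caller and never appears inside the message, so a message's encoded bytes are identical whichever path it takes. `Uplink`, its batch size and the JSON encoding are assumptions for illustration.

```python
import json
from typing import Callable, List


def encode(message: dict) -> bytes:
    """Illustrative encoding; it does not depend on how the message will be delivered."""
    return json.dumps(message, sort_keys=True).encode("utf-8")


class Uplink:
    """Hypothetical uplink: time-critical messages go out at once, others are
    accumulated and flushed together. Priority is local, not a message field."""

    def __init__(self, send: Callable[[List[bytes]], None], batch_size: int = 3):
        self._send = send
        self._batch_size = batch_size
        self._pending: List[bytes] = []

    def submit(self, message: dict, time_critical: bool) -> None:
        encoded = encode(message)
        if time_critical:
            self._send([encoded])
        else:
            self._pending.append(encoded)
            if len(self._pending) >= self._batch_size:
                self.flush()

    def flush(self) -> None:
        if self._pending:
            self._send(self._pending)
            self._pending = []  # start a new list; the sent batch is left untouched
```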

The post Self Driving Cars Platform and Their Trends (Part 1 of 2) appeared first on Electronics For You.
